Photo by Jainath Ponnala on Unsplash

About a year ago I decided to set up a blog on a Digital Ocean server. I mostly did this to get back into the DevOps world, since I mostly live as a programmer. I know these skill sets are related, but for the last 8 years I've focused on building coding skills, with some occasional dabbling in Docker. In this post I will walk you through how I configured the web server that runs my Next.js blog.

Some elements that make my setup cool and interesting:

- I set up a build system on a Raspberry Pi that triggers automatically when I push new code to GitHub.

- I set up a local container registry on the same Raspberry Pi.

- I set up dynamic DNS so that my Digital Ocean server can talk to my Raspberry Pi even though my home IP address is not static. I'll walk you through how to do this.

- I set up my Digital Ocean server to automatically pull the latest Docker image when my container registry updates, using Watchtower.

- I set up a self-hosted analytics system called Plausible, which is much simpler than Google Analytics.

- My blog is also somewhat cool intrinsically, since it parses Markdown into static pages that load very fast, but I'll link you to my inspiration rather than writing a whole write-up on this.

GitHub Webhooks and Simple CI

I will start with my automated build system and my inspiration for it. I have used a few different CI systems over the years, mostly GitLab CI, which is honestly awesome. But it's overkill for this project, and I'm not using GitLab to host the code anyway, so I figured why not play with GitHub webhooks, since all I really need is a way to trigger a Docker build when new code is pushed.

How does this work?

Basically, you need to write a simple web server. I'm using Python with Flask. The server only needs to handle a single route, which you choose, and GitHub calls that route whenever you push code to the project you configured the webhook for. All I do in this route is validate that GitHub was in fact the caller, and then I call a local script which sets up the Docker build.
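To make the validation step concrete, here is a minimal sketch of the signature check on its own, using only the Python standard library. GitHub signs the raw request body with the shared secret and sends the result in a header of the form `sha1=<hex digest>`. The function names `sign_payload` and `is_valid_signature` and the example secret are made up for illustration.

```python
import hashlib
import hmac
from typing import Optional


def sign_payload(secret: str, payload: bytes) -> str:
    """Return the header value a sender would compute for this payload."""
    digest = hmac.new(secret.encode("utf-8"), payload, hashlib.sha1).hexdigest()
    return f"sha1={digest}"


def is_valid_signature(secret: str, payload: bytes, header: Optional[str]) -> bool:
    """Check a 'sha1=<hex>' header against our own digest of the payload."""
    if not header or "=" not in header:
        return False
    algorithm, _, received = header.partition("=")
    if algorithm != "sha1":
        return False
    expected = hmac.new(secret.encode("utf-8"), payload, hashlib.sha1).hexdigest()
    # compare_digest avoids leaking information through timing differences;
    # comparing bytes also tolerates non-ASCII garbage in the header
    return hmac.compare_digest(expected.encode("utf-8"), received.encode("utf-8"))


if __name__ == "__main__":
    body = b'{"ref": "refs/heads/main"}'
    header = sign_payload("example-secret", body)
    print(is_valid_signature("example-secret", body, header))  # True
    print(is_valid_signature("wrong-secret", body, header))    # False
```

The important detail is that the digest is computed over the exact bytes of the request body, so any change to the payload or the secret makes the check fail.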

This is what my CI server code looks like:

    from flask import Flask, request, abort
    import hmac
    import subprocess

    app = Flask(__name__)

    GITHUB_SECRET = '<replace-with-your-secret>'


    @app.route('/blog-webhook', methods=['POST'])
    def webhook():
        # GitHub sends the signature as "sha1=<hex digest>"; reject requests without it
        header = request.headers.get('X-Hub-Signature')
        if header is None or '=' not in header:
            abort(403)
        sha, signature = header.split('=', 1)

        # we create a digest using hmac to validate that github was the caller
        # we set a shared secret which is stored in GITHUB_SECRET. If our secrets validate
        # then we continue.. otherwise we abort with a 403
        mac = hmac.new(GITHUB_SECRET.encode('ascii'), msg=request.data, digestmod='sha1')
        if not hmac.compare_digest(mac.hexdigest(), signature):
            abort(403)

        # call our local shell script which pulls our git and builds a new docker version.
        subprocess.call(['./blog-deploy.sh'])
        return 'OK', 200


    if __name__ == '__main__':
        app.run(host="0.0.0.0", port=5000)

Note that we could just run this from a shell, but it would only run as long as the shell is open, so we need a way to keep the server up. The more robust way is to run the app under a WSGI application server such as uWSGI, typically behind a reverse proxy like nginx. In our case we are going to do this the simple way, which is creating a service that starts automatically when the system boots and restarts if it exits. We'll use systemd.

    sudo vi /etc/systemd/system/my_python_service.service

Put the following in the file:

    [Unit]
    Description=My Python Script Service
    After=network.target

    [Service]
    Type=simple
    User=YOUR_USERNAME
    WorkingDirectory=/path/to/your/script
    ExecStart=/usr/bin/python3 /path/to/your/script.py
    Restart=always
    RestartSec=10

    [Install]
    WantedBy=multi-user.target

- Description: A brief description of your service.

- After: Orders the service to start after the network stack has been brought up.

- Type: The type of service. simple is commonly used for services that don't fork.

- User: The user that will run the script.

- WorkingDirectory: Directory where your script resides.

- ExecStart: Command to start your service.

- Restart: This will restart your service if it exits for any reason.

- RestartSec: Time to sleep before restarting the service.

Once you've saved and exited the editor, you'll need to inform systemd of the new service:

    sudo systemctl daemon-reload

Enable and Start Your Service

Now, enable your service so that it starts on boot:

    sudo systemctl enable my_python_service.service

And start it:

    sudo systemctl start my_python_service.service

Check the Status of Your Service

You can check the status of your service with:

    sudo systemctl status my_python_service.service

Now that we have a service for our hook, we have assurance that this server, assuming it's stable, will remain up. In my case the server runs on my Raspberry Pi on my home internet connection, so I have to set up port forwarding to it, since it sits behind NAT.

A Quick Aside on NAT

I know there's probably a variety of experience levels among readers of this article, so feel free to skip this if you already know about NAT. NAT stands for Network Address Translation. Nearly all home routers that connect to the IPv4 internet use it. The reason is that there are only around 4 billion IPv4 addresses, and there are significantly more devices than that on the internet. Our local network can have lots of devices on it, but to the external internet all of these devices look like one IP address. So whenever an outside service needs to connect to a specific computer on your network, you have to tell your router to forward that traffic, on a specific port, to that computer. This is called port forwarding. In our case we need to forward all traffic on port 5000 to our Raspberry Pi. Note that port forwarding can open your network up to attacks, so be cautious when you do this and seek advice from others.
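Once a forwarding rule is in place, it helps to confirm that the port actually accepts connections. The following is a small sketch that attempts a TCP connection using Python's standard `socket` module. The function name `is_port_open` is a placeholder of mine. Keep in mind that many routers won't loop traffic back to your public address from inside your own network, so a check like this is most meaningful when run from an outside machine.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures
        return False
```

For example, calling `is_port_open("your-public-ip-or-domain", 5000)` from a machine outside your home network tells you whether the forwarded webhook port is reachable.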

What Does Our Deploy Script Look Like?

    #!/bin/bash

    cd /route/to/your/blog/repo || exit 1

    # Fetch the latest tags and commits from the repo
    git fetch --all --tags

    # Checkout the commit associated with the latest tag
    latest_tag=$(git describe --tags "$(git rev-list --tags --max-count=1)")
    git checkout "$latest_tag"

    # Get the latest Git tag (redundant but kept for clarity)
    git_tag=$(git describe --tags)

    # If there's no tag for the current commit, you might want to handle this case.
    # For this example, I'll default to a "latest" string. You can adjust as needed.
    if [ -z "$git_tag" ]; then
        git_tag="latest"
    fi

    # Build our docker container
    docker buildx build --platform linux/amd64 -t "localhost:5001/my-blog:$git_tag" -t localhost:5001/my-blog:latest . --push

So the above script is pretty cool, and far from perfect, but it essentially pulls our git repo, assigns the latest git tag to the variable git_tag, and uses docker buildx to build an x64 image on our arm64 Raspberry Pi, since my Digital Ocean server runs an x64 architecture. Note that building across architectures like this massively slows down the build, since we are essentially emulating an x64 machine. Here's a resource to get buildx working on your Ubuntu Raspberry Pi, since this is not the standard docker build.
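If you would rather drive the build from Python (for instance directly from the webhook server), the tagging logic could be sketched like this. It only assembles the argument list and does not run Docker. The helper name `buildx_command` is hypothetical, and the defaults simply mirror the registry address, image name, and platform used in the script.

```python
from typing import List, Optional


def buildx_command(git_tag: Optional[str],
                   registry: str = "localhost:5001",
                   image: str = "my-blog",
                   platform: str = "linux/amd64") -> List[str]:
    """Assemble a docker buildx argument list like the one in the deploy script."""
    # Fall back to "latest" when no tag was found, as the shell script does
    version = git_tag.strip() if git_tag and git_tag.strip() else "latest"
    command = ["docker", "buildx", "build", "--platform", platform]
    # dict.fromkeys keeps order and drops the duplicate when both tags are "latest"
    for tag in dict.fromkeys([version, "latest"]):
        command += ["-t", f"{registry}/{image}:{tag}"]
    return command + [".", "--push"]


if __name__ == "__main__":
    print(" ".join(buildx_command("v1.2.0")))
```

The returned list can be passed straight to `subprocess.run`, which avoids shell quoting problems if a tag ever contains unusual characters.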

Let's Set Up Our Container Registry

So I wanted to set up a local Docker container registry that my Digital Ocean server can pull images from. This is both cool and saves money, since I don't want to spend $6 a month on a Docker account. So here's the Docker Compose file to self-host the registry on our Raspberry Pi, or really any server you choose that runs Docker:

    services:
      registry:
        image: registry:2
        ports:
          - '5001:5000'
        environment:
          REGISTRY_STORAGE_FILESYSTEM_ROOTDIRECTORY: /data
        volumes:
          - ./data:/data

Note that you need to make a directory called data in the same folder as your docker-compose.yml, which will persist your Docker images.
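To check what the registry is holding, you can query its HTTP API. This is a minimal sketch, assuming the registry is reachable without authentication. It uses the registry API's `/v2/_catalog` and `/v2/<name>/tags/list` endpoints, while the function names and the base URL in the usage note are my own placeholders.

```python
import json
from typing import List
from urllib.request import urlopen


def list_repositories(base_url: str, timeout: float = 5.0) -> List[str]:
    """Return repository names from the registry's /v2/_catalog endpoint."""
    with urlopen(f"{base_url}/v2/_catalog", timeout=timeout) as response:
        return json.load(response).get("repositories", [])


def list_tags(base_url: str, repository: str, timeout: float = 5.0) -> List[str]:
    """Return the tags the registry holds for one repository."""
    with urlopen(f"{base_url}/v2/{repository}/tags/list", timeout=timeout) as response:
        # The registry may report "tags": null for a repository with no tags
        return json.load(response).get("tags") or []
```

On the Pi itself, `list_repositories("http://localhost:5001")` should include `my-blog` once the first image has been pushed, and `list_tags("http://localhost:5001", "my-blog")` shows which versions are available to pull.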

Let's quickly lock down our server

This is by no means an exhaustive list of security measures, but as with all things security, it comes down to a risk-benefit analysis. In my case, if someone pops my Raspberry Pi it's not the end of the world. Because of this we are not going to go to the lengths we might in another context.

Let's set up our firewall rules using UFW

UFW is a pretty simple and straightforward utility on Ubuntu (and other Linux distros) that lets us set rules for which ports are open and which are not. Note that the ports we open are essentially the attack surface of our server, so ideally we want as few open as possible. In our case we need to open port 5000 to the wider internet so that the GitHub webhook can connect to our server. We also need to open our Docker registry port, but this one we can lock down to a specific IP, since our Digital Ocean server should be the only thing connecting to this service.

First we need to run this command to see if ufw is enabled:

    sudo ufw status

If it's not active, you can enable it with:

    sudo ufw enable

You'll probably want to keep SSH open unless you are physically connected to your Raspberry Pi. If you are connected over SSH right now, add the SSH rule below before enabling the firewall so you don't lock yourself out.

If you port forward to this port, you may want to either lock it down to connections from a specific IP or, at the very least, only allow logins with an SSH key, since an exposed SSH port definitely presents a big security risk. I don't expose my Pi on port 22 to the wider internet, since I'm only really working on it while I'm at home.

    sudo ufw allow 22/tcp

Let's allow connections to port 5001 (our Docker container registry) only from a specific IP. One caveat: ports published by Docker are opened through Docker's own iptables rules, which can bypass UFW, so test from another machine that this restriction actually holds.

    sudo ufw allow from [SPECIFIC_IP] to any port 5001 proto tcp

Now let's open our webhook server up to the whole internet. We don't need to open UDP, since the webhook only uses TCP.

    sudo ufw allow 5000/tcp

Now let's reload ufw to apply these rules:

    sudo ufw reload

We can now validate that our rules were applied properly by running:

    sudo ufw status

And done. You have now successfully locked down your Pi's firewall. There's a lot more you can do to harden your server against attack, and this is really just the start, but it is a very important step. Feel free to open more ports as needed.

Now let's talk about DNS and DDNS

So if you are like me, you don't have a static IPv4 address at home, which means that hosting anything accessible to the wider internet is difficult. That is, until you realize what DNS does. DNS (Domain Name System) translates human-readable addresses like google.com into an IP address, either IPv4 or IPv6. What's so powerful about this is that you can have a dynamic IP on a standard ISP internet service and still host a service that talks to the outside internet. The way this works is surprisingly simple: you pay for a domain name through whatever domain registrar you want (it doesn't really matter which). Then you can use a service like Digital Ocean (or certainly others) to manage your DNS A record. Your DNS A record is simply a record that stores the IP address you'd like to associate with your domain or subdomain name. For instance, awhb.dev currently points to 143.110.153.56, so this IP is stored in my A record. Whenever a computer requests awhb.dev, it asks the DNS server for the A record, and the server sends back 143.110.153.56. There's certainly more complexity to DNS and how it works, but we'll let this high-level conceptual understanding suffice for our needs right now.

With this understanding in mind we can now describe how Dynamic DNS works. All dynamic DNS does is tell your DNS server (in my case Digital Ocean) what your current public IP address is and associate it with a domain or subdomain name, so that your home IP address is reachable by a domain name. The service has to repeat this check-and-update loop because your ISP can change your IP address whenever they want, so to keep your server reliable the process should run at least every 10 minutes. That way your server will be inaccessible for at most 10 minutes. What's awesome is that Dynamic DNS can be configured extremely easily using this insanely awesome project.

If you're in the same situation as I am, you likely don't have a static IPv4 address at home. This presents a challenge when you're trying to host data that you want accessible to the wider internet. But once you understand the function and power of the Domain Name System (DNS), this challenge becomes much more manageable.

The Domain Name System, or DNS, serves as the internet's phonebook. It translates human-readable web addresses, such as google.com, into numerical IP addresses, which can be either IPv4 or IPv6. The significance of this system is profound: even if you have a constantly changing or dynamic IP address provided by a standard internet service provider (ISP), you can still host a service and make it accessible to the broader internet.

Here's how it works in basic terms:

- Purchase a Domain Name: First, you'll need a domain name, which you can buy from any domain registrar. The specific registrar you choose isn't crucial.

- Managing DNS Records: Services like Digital Ocean (among others) allow you to manage your domain's DNS records, particularly the 'A record'. This A record is essentially an entry that stores the IP address you want to link with your domain or subdomain name. As an example, my domain awhb.dev currently maps to the IP address 143.110.153.56. (Please don't hack me 😱) This IP is what's listed in my domain's A record.

- DNS Query Process: Whenever someone tries to access awhb.dev, their computer doesn't initially know where to go. It asks a DNS server for the corresponding A record. The DNS server then replies with the stored IP address (in this case, 143.110.153.56), and the computer can then communicate with the server at that IP address. This is a simplification, but it captures the essence of how DNS facilitates web communication.
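You can watch this lookup happen from Python. The sketch below asks the operating system's resolver for the IPv4 addresses behind a name using the standard `socket.getaddrinfo` call. The function name `resolve_ipv4` is just an illustrative choice.

```python
import socket
from typing import List


def resolve_ipv4(hostname: str) -> List[str]:
    """Return the distinct IPv4 addresses the system resolver finds for hostname."""
    results = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                                 type=socket.SOCK_STREAM)
    # Each result is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP
    return sorted({sockaddr[0] for *_, sockaddr in results})
```

Calling `resolve_ipv4("example.com")` returns whatever addresses the domain's A records currently hold, which is a quick way to confirm that a record you just changed has taken effect.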

Now, with this foundation in DNS, let's explore Dynamic DNS (DDNS) and its relevance to those with dynamic IPs.

Dynamic DNS is a service that automatically updates your DNS server with your current public IP address. It keeps your domain name (like awhb.dev) consistently linked to your changing home IP address. This is crucial because ISPs can change your IP address without notice. For reliability, the DDNS service regularly checks and updates the IP address associated with your domain. Ideally, this check-update cycle should occur every 10 minutes to ensure that, even if your IP changes, your server will at most be inaccessible for a brief period.

Setting up Dynamic DNS might sound complicated, but there are tools available that make the process straightforward. For instance, there's an excellent project on GitHub called ddns-updater that streamlines the setup. All this service is doing behind the scenes is periodically checking what your current public IP is. It saves what it last was in a local JSON file and if it changes compared to the last time it checked it updates your DNS A record for your domain or sub domain.
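The check-and-update cycle described above can be sketched in a few lines of Python. This is an illustration of the idea, not the actual code of ddns-updater. The function `check_and_update`, the state file layout, and the two callables (one that looks up your public IP, one that calls your DNS provider's API) are all assumptions made for the example.

```python
import json
from pathlib import Path
from typing import Callable


def check_and_update(state_file: Path,
                     get_public_ip: Callable[[], str],
                     update_record: Callable[[str], None]) -> bool:
    """Update the DNS record only when the public IP differs from the saved one.

    Returns True if an update was sent, False if the IP was unchanged.
    """
    last_ip = None
    if state_file.exists():
        last_ip = json.loads(state_file.read_text()).get("ip")

    current_ip = get_public_ip()
    if current_ip == last_ip:
        return False

    update_record(current_ip)  # e.g. a call to your DNS provider's API
    # Save only after the update succeeded, so a failed update is retried next time
    state_file.write_text(json.dumps({"ip": current_ip}))
    return True
```

In practice you would call this in a loop with something like `time.sleep(600)` between runs, which gives the 10 minute cycle mentioned earlier.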

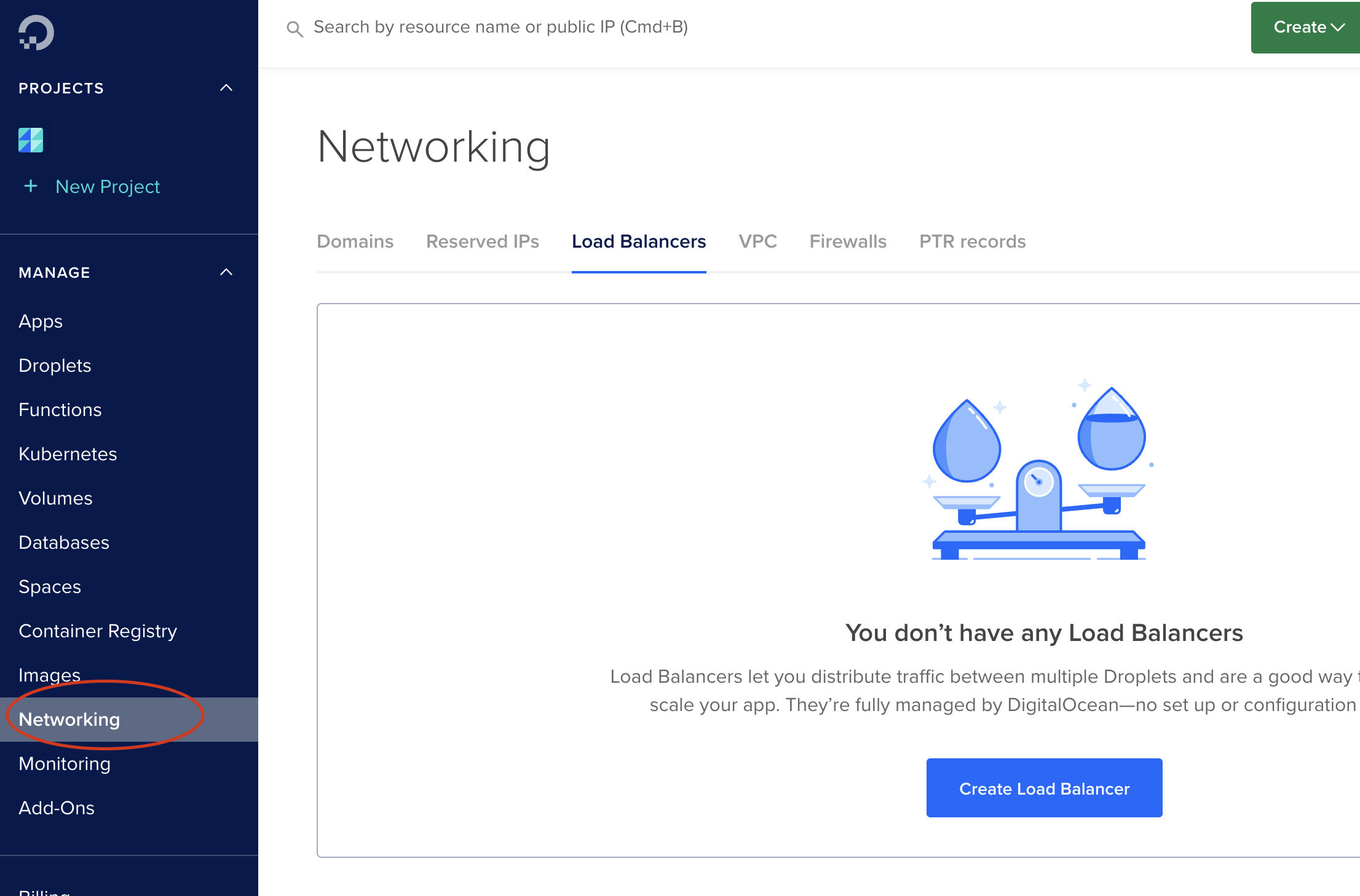

First things first: let's go to our network tab.

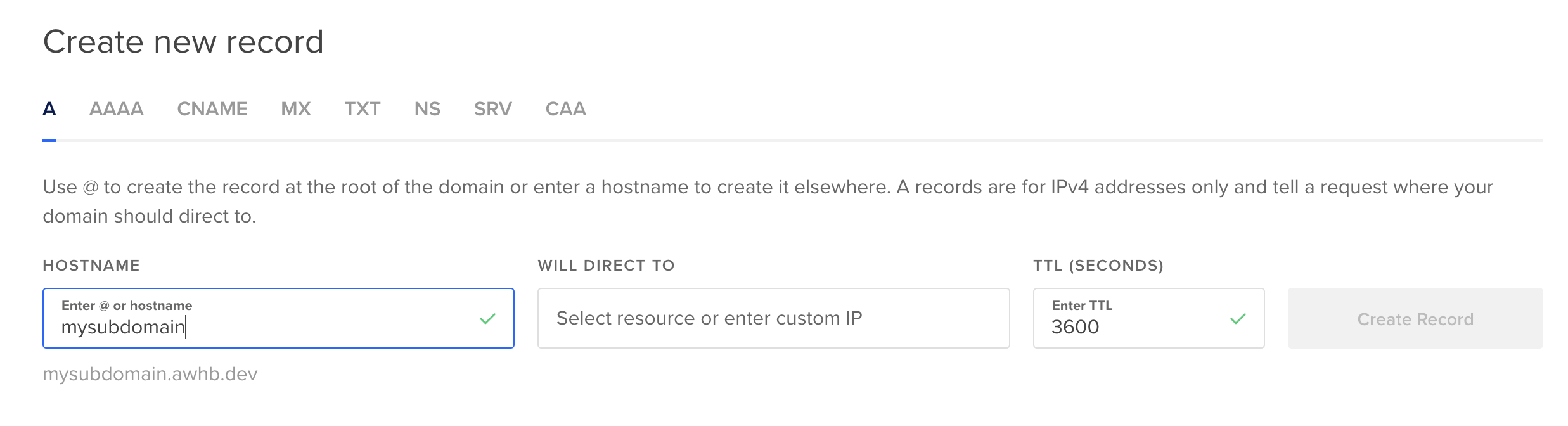

Next we need to add either a top-level domain or a subdomain to associate our DDNS IP with.

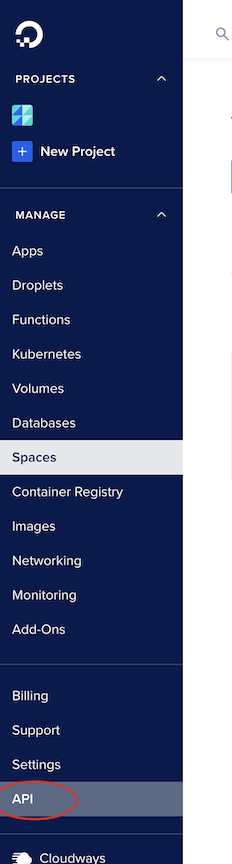

Next we need an API key in order to update our record from our Raspberry Pi.

After you've generated an API key, be sure to keep it safe, because it has access to your Digital Ocean account. Someone could do a lot of damage to your account with this key.

Next we need to create a config.json file where we'll store our config for ddns-updater:

    {
      "settings": [
        {
          "provider": "digitalocean",
          "domain": "example.com",
          "host": "sub",
          "token": "my-digital-ocean-key",
          "ip_version": "ipv4"
        }
      ]
    }

In the above example, we're updating the A record for sub.example.com.

If you are trying to update just example.com, I think host would be @, but be sure to review the docs for your specific use case.

We need to store the above config.json file in a folder, which I'll call data.

To start the service with Docker, we run:

    docker run -d -p 8000:8000/tcp -v "$(pwd)"/data:/updater/data qmcgaw/ddns-updater

We are assuming that your data directory, containing config.json, is in your current working directory. We are also publishing port 8000, which serves a web UI. Feel free not to expose the web UI. I don't really use it myself, but it does give you status updates on your DDNS records.

I probably have more to go over, but I'll save that for a future post since this one is getting long already.